RAPIDS and Amazon SageMaker: Scale up and scale out to tackle ML challenges | AWS Machine Learning Blog

Achieving 1.85x higher performance for deep learning based object detection with an AWS Neuron compiled YOLOv4 model on AWS Inferentia | AWS Machine Learning Blog

Bring your own deep learning framework to Amazon SageMaker with Model Server for Apache MXNet | AWS Machine Learning Blog

How to run distributed training using Horovod and MXNet on AWS DL Containers and AWS Deep Learning AMIs | AWS Machine Learning Blog

Evolution of Cresta's machine learning architecture: Migration to AWS and PyTorch | AWS Machine Learning Blog

AWS and NVIDIA to bring Arm-based Graviton2 instances with GPUs to the cloud | AWS Machine Learning Blog

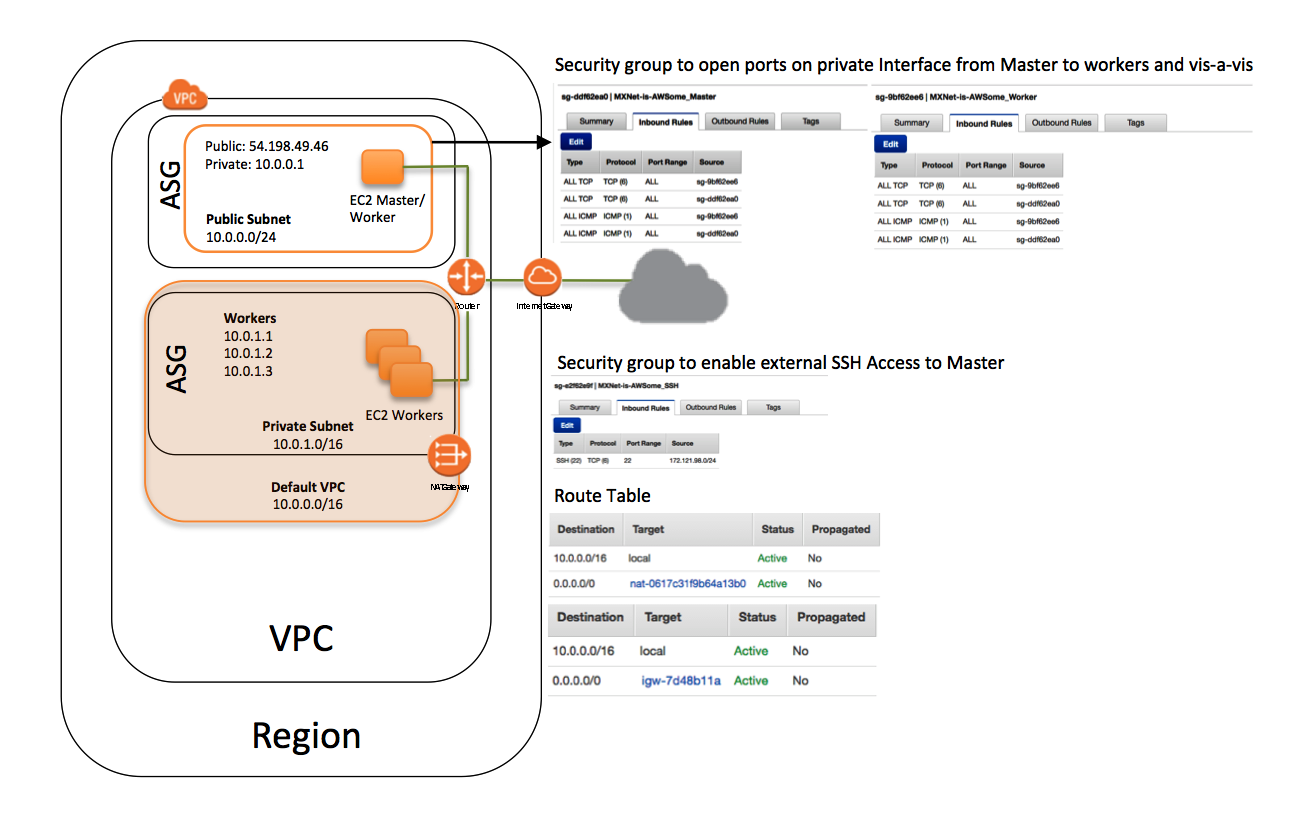

Amazon Elastic Kubernetes Services Now Offers Native Support for NVIDIA A100 Multi-Instance GPUs | NVIDIA Technical Blog

Hyundai reduces ML model training time for autonomous driving models using Amazon SageMaker | AWS Machine Learning Blog

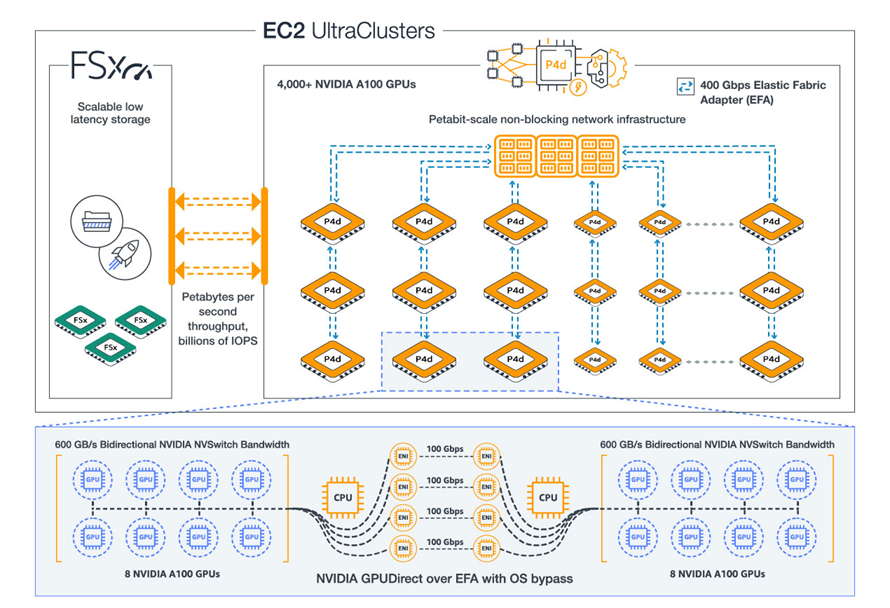

Multi-GPU distributed deep learning training at scale with Ubuntu18 DLAMI, EFA on P3dn instances, and Amazon FSx for Lustre | AWS Machine Learning Blog

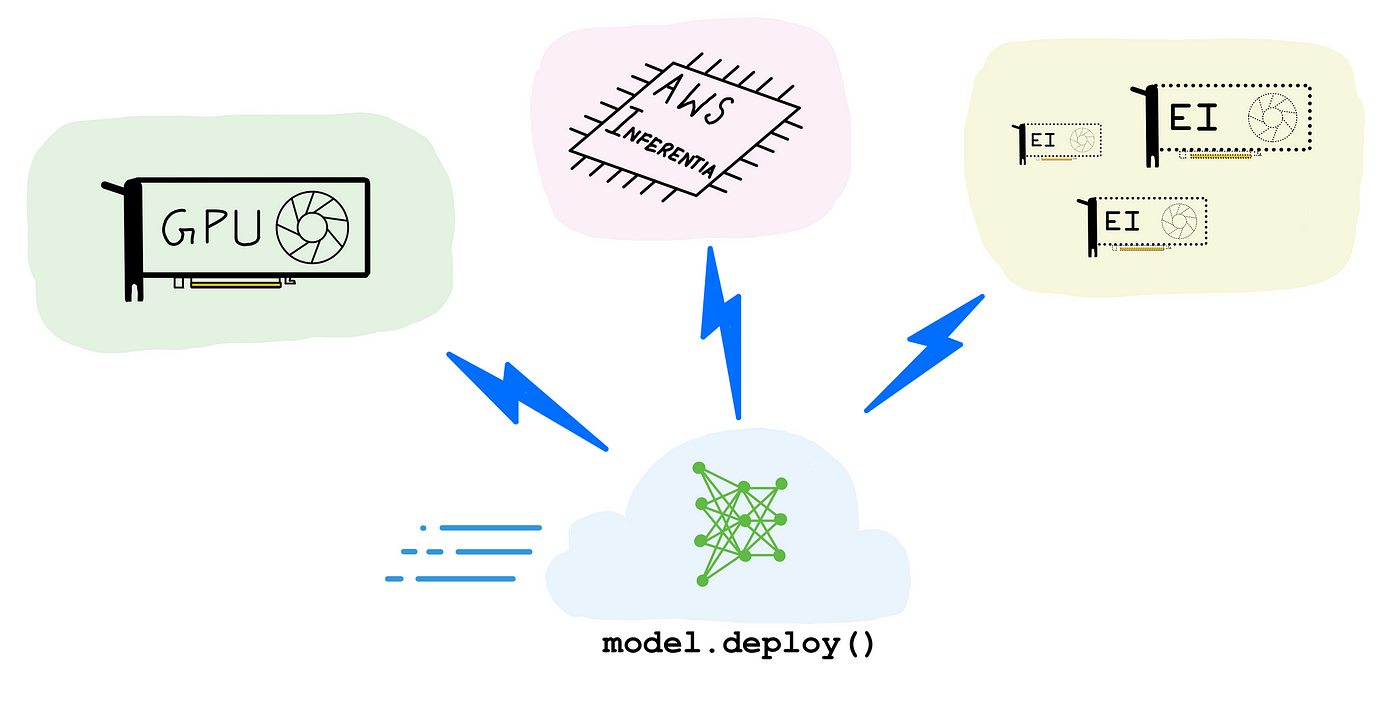

A complete guide to AI accelerators for deep learning inference — GPUs, AWS Inferentia and Amazon Elastic Inference | by Shashank Prasanna | Towards Data Science